How to improve survey response quality

Just like it takes time and effort to create an innovative product or implement an incredible customer experience, it takes time and effort to design a good survey. Whether you want to get the pulse on a specific touchpoint or understand what drives your consumers, you want to ensure your survey respondents will provide you with valuable data. So how can you make sure that participants will give you responses you can use, and how can you quickly dismiss those who are just rushing through to get to the compensation?

Weed out unfocused respondents

Distracted, unfocused, or impatient respondents sometimes negatively impact otherwise insightful research. When we're aware of these offenders, we can intentionally design our survey to discourage negative behaviors. As you receive completes, watch out for:

- Speeders: participants who complete a survey in an unrealistic amount of time
- Straightliners: participants who choose the same answer over and over (creating a straight line of selected answers) or choose answers to form a pattern

Every time you field a quantitative survey or online discussion, take steps before the results are analyzed to flag and remove respondents who don't seem to be putting thought into their answers. Doing so helps ensure reliable and accurate data.

Measure the time it takes to complete the survey

A speeder threshold is typically established for each study to catch those hurrying through the survey. Various methods are used to establish this cutoff, including examining the mean, median, and standard deviation, as well as looking for a natural break or thinking through what is reasonable for various audiences or paths through the survey. Importantly, a speeder threshold should be applied with objectivity to avoid “cherry picking” certain respondents to keep or remove.
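As a rough illustration of one such cutoff, the sketch below flags anyone who finishes in less than a fixed fraction of the median completion time. The function name `flag_speeders`, the `fraction=0.33` default, and the sample durations are assumptions made for this example, not a recommended standard; the right cutoff depends on the study.

```python
from statistics import median

def flag_speeders(durations, fraction=0.33):
    """Return (cutoff_seconds, sorted ids of respondents faster than the cutoff).

    durations: dict mapping respondent id -> completion time in seconds.
    fraction:  hypothetical share of the median used as the cutoff.
    """
    cutoff = fraction * median(durations.values())
    flagged = sorted(rid for rid, secs in durations.items() if secs < cutoff)
    return cutoff, flagged

# Made-up completion times, in seconds
durations = {"r1": 610, "r2": 545, "r3": 120, "r4": 720, "r5": 580, "r6": 95}
cutoff, speeders = flag_speeders(durations)
print(cutoff, speeders)
```

Because the cutoff is computed once from the data and then applied to every respondent, no one is kept or removed by hand, which is the objectivity the threshold is meant to provide.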

Set a straightliner threshold

Some studies are particularly vulnerable to straightlining and warrant an additional quality control check. In surveys with large batteries of similarly phrased questions (such as an attitudinal battery), we examine the data for straightliners. This process generally involves mining the data for respondents who use the same scale point to answer multiple questions in a row. Because this is done on a case-by-case basis, the straightliner threshold will be unique to each study. Once a straightlining threshold is established, it can be applied across the board without discretion.

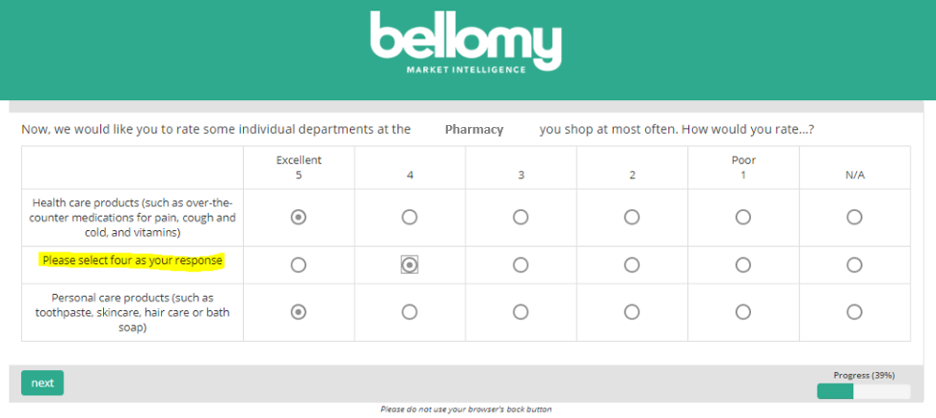
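One way to mine a battery for straightliners is to measure each respondent's longest run of identical consecutive answers and compare it with the study's threshold. The sketch below assumes answers are stored as a list of scale points per respondent; the names `longest_run` and `flag_straightliners`, the `max_run` value, and the sample data are hypothetical. It only catches same-answer runs, not patterned answers such as zigzags.

```python
from itertools import groupby

def longest_run(answers):
    """Length of the longest streak of identical consecutive answers."""
    return max((len(list(group)) for _, group in groupby(answers)), default=0)

def flag_straightliners(grid_answers, max_run):
    """Return sorted ids whose longest streak is at least max_run."""
    return sorted(
        rid for rid, answers in grid_answers.items()
        if longest_run(answers) >= max_run
    )

# Made-up answers to an eight-item attitudinal battery on a 1-5 scale
grid_answers = {
    "r1": [4, 5, 3, 4, 2, 4, 5, 3],
    "r2": [3, 3, 3, 3, 3, 3, 3, 3],
    "r3": [5, 4, 4, 4, 4, 4, 4, 2],
}
straightliners = flag_straightliners(grid_answers, max_run=6)
print(straightliners)
```

The `max_run` value is the study-specific threshold: once chosen, it is applied identically to every respondent.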

Use a red herring question

There are some precautions to take when designing the survey itself. "Red herring" questions embedded among survey questions can identify speeders or otherwise disengaged respondents, as well as bots. The figurative use of "red herring" is usually credited to English journalist William Cobbett, who in the early 1800s wrote a fictional story about using a red herring as a boy to throw hounds off the scent of a hare. In market research, the red herring is a way of catching respondents off-guard as they race through the survey.

When to insert a red herring question into a survey

A red herring question is an effective way to identify speeders and straightliners, especially in surveys that are longer, more complex, or require grid questions with many options. We understand that some respondents may have strong, positive opinions on a long list of topics. However, choosing "excellent" when the question asks you to "select four as your response" signals an unfocused respondent. Respondents who answer a red herring question incorrectly should be flagged, taken to the end of the survey, and have their responses removed.
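Checking a red herring item after fielding can be as simple as comparing each respondent's answer with the instructed one. In the sketch below, the question id `q7_check`, the function name `failed_red_herring`, and the sample responses are all hypothetical. Treating a skipped red herring as a failure is also an assumption of this example.

```python
def failed_red_herring(responses, checks):
    """Return sorted ids of respondents who missed any red herring item.

    responses: dict mapping respondent id -> {question id: answer}.
    checks:    dict mapping red herring question id -> instructed answer.
    A missing answer counts as a failure (an assumption of this sketch).
    """
    return sorted(
        rid for rid, answers in responses.items()
        if any(answers.get(q) != expected for q, expected in checks.items())
    )

# "Select four as your response" embedded in a 1-5 grid
checks = {"q7_check": 4}
responses = {
    "r1": {"q7_check": 4},
    "r2": {"q7_check": 5},  # chose "excellent" instead of four
    "r3": {},               # skipped the item
}
failed = failed_red_herring(responses, checks)
print(failed)
```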

Examine verbatims

While a lot goes into removing bad respondents from our data, just as much effort goes into ensuring we collect unbiased, quality responses. Open-ended questions allow respondents to express themselves clearly and thoughtfully. Using an open-ended question in a screener survey can serve as a quality check to ensure you are recruiting people who will give complete and thoughtful answers during future research.

Verbatims can also help confirm speeder or straightliner cutoffs. If respondents flagged as speeders or straightliners nonetheless gave complete, well-thought-out answers to open-ended questions, you may want to modify the threshold initially set.

Look for contradictions in answers

Contradictions in respondents' answers are another red flag, such as changing their position on a brand or behavior at different points in the survey. These inconsistencies show up in both closed-ended and open-ended data. However, we appreciate it when a respondent self-identifies that they answered a question incorrectly. Since many surveys don't allow respondents to go back and change an answer, it's helpful when they admit they may not have answered a question truthfully and explain what they meant to share.
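For closed-ended data, contradictions can be screened with simple consistency rules that compare related answers. The sketch below is one possible shape for such a check; the rule name, the question ids `ever_bought` and `last_purchase_rating`, and the sample data are invented for illustration.

```python
def find_contradictions(responses, rules):
    """Map each respondent id to the names of the consistency rules they break.

    rules: dict mapping rule name -> function that returns True when a
           respondent's answers are consistent.
    """
    flagged = {}
    for rid, answers in responses.items():
        broken = [name for name, is_consistent in rules.items()
                  if not is_consistent(answers)]
        if broken:
            flagged[rid] = broken
    return flagged

# Hypothetical rule: someone who never bought the brand should not rate a purchase
rules = {
    "never_bought_but_rated_last_purchase": lambda a: not (
        a["ever_bought"] == "no" and a["last_purchase_rating"] is not None
    ),
}
responses = {
    "r1": {"ever_bought": "yes", "last_purchase_rating": 4},
    "r2": {"ever_bought": "no", "last_purchase_rating": 5},
    "r3": {"ever_bought": "no", "last_purchase_rating": None},
}
contradictions = find_contradictions(responses, rules)
print(contradictions)
```

A flag from a check like this is a prompt to review the case rather than grounds for automatic removal, since the respondent may have explained the slip in an open-ended answer.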

As researchers, we're always striving to provide the most accurate data possible. No matter the type, length, or subject, surveys should be designed in a way that prompts respondents to answer questions thoughtfully, and those responses should be scrutinized and filtered to produce a representative, trustworthy data set. Contact us to get started on designing a survey that delivers useful data.

- smartlab